Accounting & SEC Update: 2023 Third Quarter

During Weaver’s third quarter Accounting & SEC Update, Weaver’s IT and Accounting Advisory leaders held an in-depth discussion of the new Securities and Exchange Commission (SEC) cybersecurity rules and the use of generative AI.

Whether or not your company is public, the new SEC regulations are helpful to understand. The disclosures, incident response requirements, and other measures included in the rules promote a healthy cybersecurity ecosystem that can help mitigate exposure to risk.

The growing use of generative AI products such as OpenAI’s ChatGPT, and the monitoring they require, pose unique challenges. These products also offer opportunities to create efficiencies and deeper insights for users.

SEC Cybersecurity Rules

Following a three-to-two vote, the Securities and Exchange Commission adopted new rules on cybersecurity risk management, strategy, governance, and incident disclosure on July 26, 2023. The rules are codified in Regulation S-K and require public companies to file cybersecurity incident disclosures within four business days of determining that an incident is material, and to provide substantial detail on their cybersecurity program in their annual Form 10-K filing.

All companies filing Form 10-K for fiscal years ending on or after December 15, 2023, are required to include Item 1C, in which they describe their cybersecurity program and practices, to comply with the new SEC cybersecurity rules. Companies should begin compiling information, evaluating their practices, and preparing a draft of their disclosure now so they are prepared at year end.

| Item | Summary Description of the Disclosure Requirement |
|---|---|
| Regulation S-K Item 106(b): Risk management and strategy | Registrants must describe their processes, if any, for the assessment, identification, and management of material risks from cybersecurity threats, and describe whether any risks from cybersecurity threats have materially affected or are reasonably likely to materially affect their business strategy, results of operations, or financial condition. |
| Regulation S-K Item 106(c): Governance | Registrants must: • Describe the board’s oversight of risks from cybersecurity threats. • Describe management’s role in assessing and managing material risks from cybersecurity threats. |
| Form 8-K Item 1.05: Material Cybersecurity Incidents | Registrants must disclose any cybersecurity incident they experience that is determined to be material, and describe the material aspects of its: • Nature, scope, and timing; and • Impact or reasonably likely impact. An Item 1.05 Form 8-K must be filed within four business days of determining an incident was material. A registrant may delay filing as described below, if the United States Attorney General (“Attorney General”) determines immediate disclosure would pose a substantial risk to national security or public safety. Registrants must amend a prior Item 1.05 Form 8-K to disclose any information called for in Item 1.05(a) that was not determined or was unavailable at the time of the initial Form 8-K filing. |
| Form 20-F | Foreign private issuers (FPIs) must: • Describe the board’s oversight of risks from cybersecurity threats. • Describe management’s role in assessing and managing material risks from cybersecurity threats. |
| Form 6-K | FPIs must furnish on Form 6-K information on material cybersecurity incidents that they disclose or otherwise publicize in a foreign jurisdiction, to any stock exchange, or to security holders. |

Source: Federal Register, Cybersecurity Risk Management, Strategy, Governance, and Incident Disclosure, August 4, 2023.

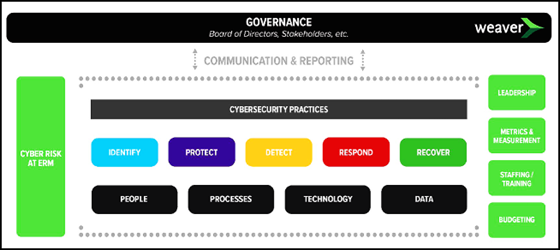

Form 10-K and Governance

When documenting and evaluating the governance of a cybersecurity program at the organization level on Form 10-K, it’s helpful to look at the people, processes, technology, and data that collectively make up your ecosystem. Existing control frameworks and practices can be the starting point for preparing the information required by the SEC.

The oversight of a cybersecurity program requires robust documentation. It must explain how the control environment and business practices maintain system and data access within the ecosystem. Board members are expected to provide oversight of the cybersecurity program to ensure the health of the company and mitigate exposure to potential risks.

The use of third-party experts—such as attorneys, outside cybersecurity advisors, and insurers—should be included in the documentation within your plan. Be particularly cognizant of integration points—such as through ancillary support systems—that may pose a higher exposure risk.

Form 8-K & Materiality

The new cybersecurity rules require that companies report material incidents on Form 8-K within four business days of when the determination of materiality is made. This requirement is already effective and needs to be followed if an incident occurs.
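To make the timing concrete, the sketch below counts four business days forward from the date materiality is determined. It is a minimal illustration, not legal guidance: the function name `filing_deadline` and the `holidays` parameter are hypothetical, weekends are skipped automatically, and any holidays must be supplied by the caller.

```python
from datetime import date, timedelta


def filing_deadline(determined_on, business_days=4, holidays=frozenset()):
    """Return the date `business_days` business days after `determined_on`.

    Assumption: a business day is Monday through Friday, excluding any
    dates the caller passes in `holidays`.
    """
    current = determined_on
    remaining = business_days
    while remaining > 0:
        current += timedelta(days=1)
        # weekday() returns 0-4 for Monday-Friday, 5-6 for the weekend.
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
    return current


# Materiality determined on Wednesday, December 20, 2023,
# treating December 25 as a holiday.
print(filing_deadline(date(2023, 12, 20), holidays={date(2023, 12, 25)}))
# prints 2023-12-27
```

The clock starts at the materiality determination, not at the date the incident occurred or was discovered, so the determination date is the value to record and pass in.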

Determining materiality is subjective, and companies and individuals are likely to define and measure it in different ways. While it isn’t a new activity, the time pressure and the potential for public scrutiny now add weight to it.

Pro Tip: Weaver’s Incident Response Checklist is a free tool available for download that can set the stage for documenting your Incident Response actions.

When a cybersecurity event occurs (a potential breach, unauthorized access, or an errant email, for example), it’s important to ask numerous questions to evaluate the event for impact and necessary response, as well as for potential disclosure if the situation is determined to be material.

- What happened?

- Who did it impact?

- What was the time period?

- What are the downstream effects?

- Did it happen inside our infrastructure, or were we part of a supply chain?

- What are the costs involved?

  - Fixed costs: sending out notices, cyber insurance premium costs, outside consultants or legal experts

  - Opportunity costs: Is there a loss of revenue? A loss of our customer base?
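One way to keep the answers to these questions together is a simple record that can be filled in as facts emerge. The sketch below is an assumption about how such a record might look. The class name `IncidentAssessment` and its fields are hypothetical, and it only totals estimated costs. It does not decide materiality, which remains a judgment call.

```python
from dataclasses import dataclass, field


@dataclass
class IncidentAssessment:
    """Hypothetical record of the triage questions for one event."""
    what_happened: str
    who_was_impacted: str
    time_period: str
    downstream_effects: str
    inside_own_infrastructure: bool  # False if the event came through a supply chain
    fixed_costs: dict = field(default_factory=dict)        # e.g. notices, consultants
    opportunity_costs: dict = field(default_factory=dict)  # e.g. lost revenue

    def total_estimated_cost(self):
        """Sum every estimated cost recorded so far."""
        return sum(self.fixed_costs.values()) + sum(self.opportunity_costs.values())


# Illustrative values only.
assessment = IncidentAssessment(
    what_happened="Errant email containing client data",
    who_was_impacted="Three clients",
    time_period="Single day",
    downstream_effects="Client notifications required",
    inside_own_infrastructure=True,
    fixed_costs={"notices": 5_000, "outside counsel": 20_000},
    opportunity_costs={"estimated lost revenue": 50_000},
)
print(assessment.total_estimated_cost())
```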

Risk Assessments

In any discussion of cybersecurity frameworks and disclosures, it is important to acknowledge that an enterprise-level risk assessment is an essential part of a cybersecurity ecosystem.

A comprehensive risk assessment includes documenting the framework used, roles and responsibilities, where risks live, the touchpoints between Operations and IT Security professionals, and the elements that have potential exposure as well as those that mitigate risks. The information collected and documented—the artifacts—should collectively live in a Cybersecurity Program Charter that connects all the dots.

Importantly, performing periodic walk-throughs of your internal Incident Response checklist, call trees, and other tools will help to ensure your readiness should an incident occur, and aid you in identifying any existing gaps.

Emerging Technology – Generative AI

Generative artificial intelligence continues to have a prominent presence in the workplace. The launch of OpenAI’s ChatGPT a year ago spawned the rapid development and release of a host of competitive platforms and supplemental plug-ins. We now encounter AI-enabled tools daily, both as standalone cloud-based systems and as integrations in our existing applications.

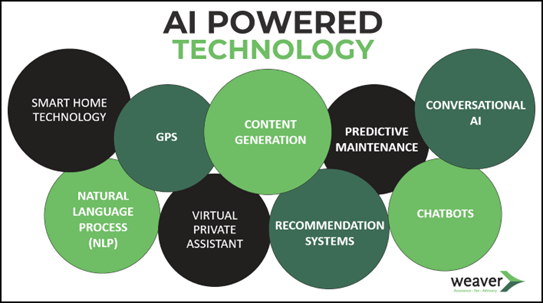

Assistive AI to Generative AI

We have been living with AI-assistive technology for several years—GPS, home automation, and autonomous cars to name a few. This new generation of AI is generative—it receives our requests (prompts), analyzes its vast library of information and data, and creates responses that never existed before.

Users can ask for highly specific responses by assigning the AI roles, such as telling it who the information would be coming from, who the audience is, the tone of voice, the desired length of reply, and anything else they feel is relevant. When the initial response—or the 12th—doesn’t hit the mark, the user can continually hone the given reply until they are satisfied. The interactive ability of generative AI can create very useful results that can then be used as a template or reviewed for accuracy.
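The role-assignment approach described above can be sketched as a small prompt template. This is only an illustration: `build_prompt` and its parameters are hypothetical names, and the resulting text would still need to be pasted into, or sent to, whichever AI platform your organization has approved.

```python
def build_prompt(task, author_role, audience, tone, max_words):
    """Assemble a prompt that states who is writing, for whom, and how."""
    lines = [
        f"You are writing as {author_role}.",
        f"The audience is {audience}.",
        f"Use a {tone} tone.",
        f"Keep the reply under {max_words} words.",
        "",
        f"Task: {task}",
    ]
    return "\n".join(lines)


# Illustrative values only.
print(build_prompt(
    task="Summarize the new SEC cybersecurity disclosure rules.",
    author_role="a corporate controller",
    audience="the audit committee",
    tone="formal",
    max_words=300,
))
```

Refining a reply then amounts to changing one of these inputs, such as the tone or length, and asking again.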

Opportunities with Generative AI

While there are many potential drawbacks that must be considered, as discussed further below, the potential of generative AI comes not only from its ability to create novel responses but also from the efficiencies it can provide. If you give it a list of bullet points, it can generate an outline or an article about the topics, even making connections between them for a cohesive, human-like result.

Need a memo? Report? Or to evaluate potential accounting treatments of a transaction? These are all possible. Asking the AI to draft control procedures, job descriptions, or a simple chart of accounts can give users a jumping-off point that otherwise could have taken them hours to develop.

For illustration, when preparing external financial reports, a user may ask a private internal instance of a generative AI platform to draft a management’s discussion and analysis (MD&A) section that reports line-item fluctuations, identifies the drivers of the changes (if enough detail is provided), and produces KPI values. Any response produced should be heavily vetted and edited to ensure that the final MD&A accurately represents management’s perspective.
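One way to vet the fluctuations an AI reports is to recompute them independently. The sketch below is a minimal example under stated assumptions: the function name `line_item_fluctuations`, the 10% threshold, and all figures are hypothetical and not drawn from any actual filing.

```python
def line_item_fluctuations(prior, current, threshold_pct=10.0):
    """Compare two periods and return one row per line item present in both."""
    rows = []
    for item, prior_value in prior.items():
        if item not in current:
            continue  # no comparable current-period value
        change = current[item] - prior_value
        # Percent change is undefined when the prior-period value is zero.
        pct = round(change * 100 / prior_value, 1) if prior_value else None
        rows.append({
            "item": item,
            "change": change,
            "pct_change": pct,
            "needs_explanation": pct is not None and abs(pct) >= threshold_pct,
        })
    return rows


# Hypothetical figures, in thousands of dollars.
prior_year = {"Revenue": 1000, "Operating expenses": 400}
current_year = {"Revenue": 1150, "Operating expenses": 420}

for row in line_item_fluctuations(prior_year, current_year):
    print(row)
```

Rows flagged `needs_explanation` are the ones where the drafted narrative should name a driver, and where a reviewer should confirm that the driver is real.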

Risks with Generative AI – Hallucinating

The elephant-in-the-room risk of generative AI is that it can make up information that reads as if it were factual. Fabricating results, often called hallucinating, happens because the model is ‘reading’ articles and databases full of details and making ‘logical’ assumptions about what they mean.

When inaccurate data is part of the material the AI draws on, it too can be regurgitated in new replies. Users should understand and track down the sources behind AI responses, and all responses from AI should be fact-checked before they are relied upon.

Risks with Generative AI – Data Security & Privacy

Another major concern with generative AI is data security and privacy. Sharing customer or client data, or your own company’s data, would be detrimental to your relationships, and it can easily happen if users aren’t properly guided on AI usage and privacy concerns. Unintentional data breaches like this can be mitigated through training and by limiting access to AI through public platforms.

Using private instances of OpenAI, Microsoft Copilot, and others will help to shield data from outside access while still allowing the sharing of prompts and output. Other connections, such as plug-ins and apps within the generative AI app or AI tools embedded in CRMs, ERPs, and other systems, present additional exposure that must be evaluated and tested.

AI Governance

The people who work to build artificial intelligence systems are the strongest advocates for AI governance. Human oversight is needed both when using and relying on AI and to ensure that replies are properly vetted and fact-checked. Companies need to consider when disclosure about the use of AI is required or appropriate.

State and local governments, industry trade groups, and countries are evaluating the usage and auditing of AI, and more governance is expected as systems mature. The European Union’s AI Act and the U.S. AI Bill of Rights are just two of the governance documents under development.

Next Steps

If you need to create or update your Incident Response Plan, test your cyber preparedness, or would like additional guidance on preparing your SEC disclosures, assessing your cybersecurity program, or considering the use of generative AI, Weaver’s IT Advisory Services team can help. Contact us to request a free consultation.

©2023
